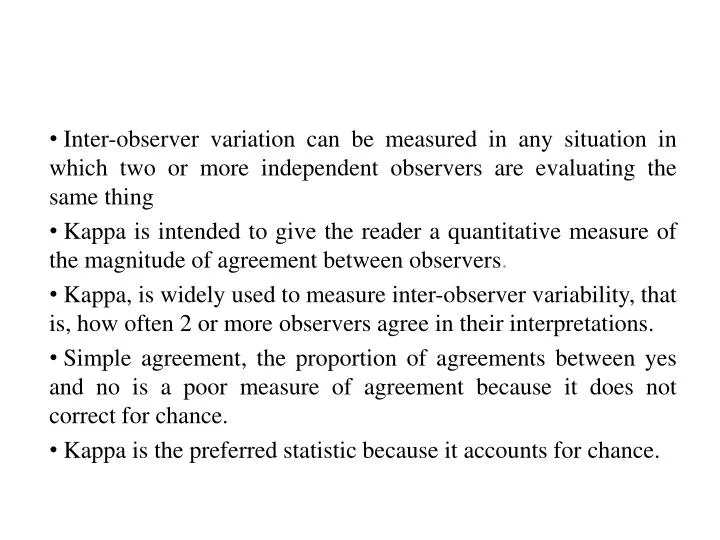

Pitfalls in the use of kappa when interpreting agreement between multiple raters in reliability studies

![More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/79de97d630ca1ed5b1b529d107b8bb005b2a066b/2-Figure2-1.png)

[PDF] More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar

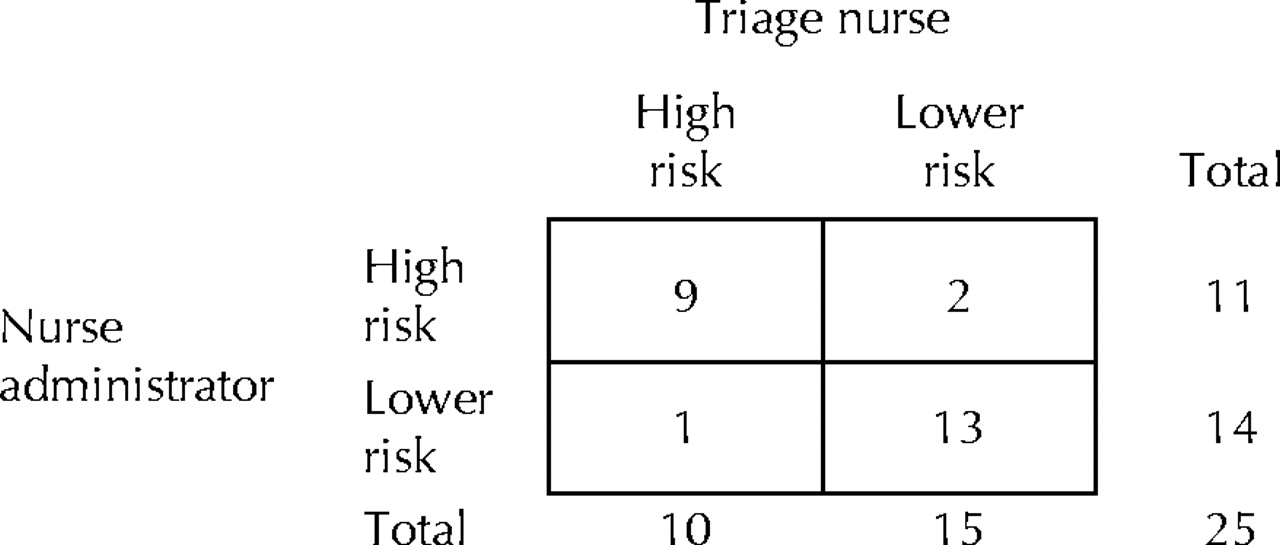

![The kappa statistic in reliability studies: use, interpretation, and sample size requirements | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/6d3768fde2a9dbf78644f0a817d4470c836e60b7/3-Table1-1.png)

[PDF] The kappa statistic in reliability studies: use, interpretation, and sample size requirements. | Semantic Scholar

Comparing dependent kappa coefficients obtained on multilevel data - Vanbelle - 2017 - Biometrical Journal - Wiley Online Library

Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

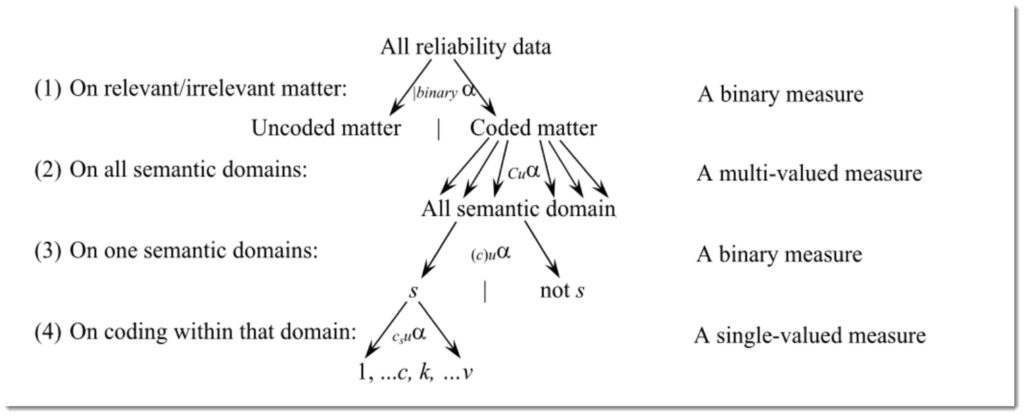

242-2009: More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters

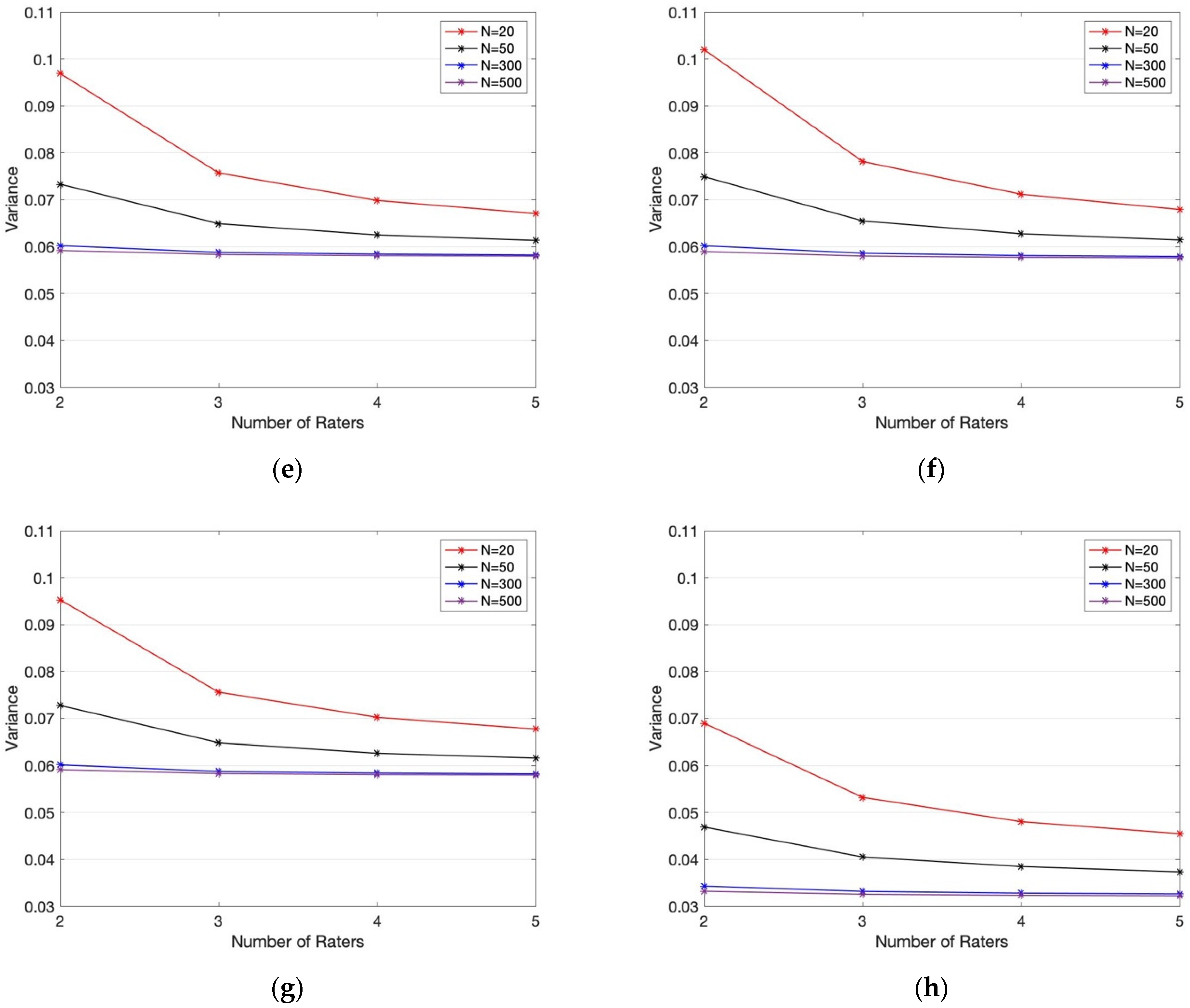

(PDF) Sequentially Determined Measures of Interobserver Agreement (Kappa) in Clinical Trials May Vary Independent of Changes in Observer Performance

Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification - ScienceDirect

![More than Just the Kappa Coefficient: A Program to Fully Characterize Inter-Rater Reliability between Two Raters | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/79de97d630ca1ed5b1b529d107b8bb005b2a066b/1-Figure1-1.png)